
Objective structured practical examination (OSPE) for formative assessment of medical undergraduates in Biochemistry

Correspondence to SHILPA SUNEJA, Room No. 218, 2nd floor, College Building; shilpasuneja@rediffmail.com

[To cite: Suneja S, Kaur C. Objective structured practical examination (OSPE) for formative assessment of medical undergraduates in Biochemistry. Natl Med J India 2024;37:261–6. DOI: 10.25259/NMJI_517_2022].

Abstract

Background

Assessment is a process that includes ascertaining improvement in students’ performance over time, motivating students to study, evaluating teaching methods and ranking students. Despite the introduction of competency-based medical education, assessment remains largely unchanged. Most medical colleges still follow conventional practical examination (CPE) methods, which raise concerns about examiner variability, standardisation and uniformity of assessment. Objective structured practical examination (OSPE) combines objective testing through direct observation with assessment of knowledge, comprehension and skills. We studied the feasibility and acceptability of OSPE as a method of formative assessment of practical skills in biochemistry and determined faculty and student perceptions of OSPE as an assessment tool.

Methods

Phase 1 MBBS students of the 2020–21 batch of Vardhman Mahavir Medical College and Safdarjung Hospital, New Delhi, were divided into two groups of 85 students each. The first group was assessed for Competency-1 through CPE and the second group for the same competency through OSPE. The groups were then crossed over: the first group was assessed for Competency-2 through OSPE and the second through CPE. The process was repeated for the third and fourth competencies. Thus, two crossovers were performed, with four OSPEs and their corresponding CPEs. The mean scores obtained with the two assessment methods were compared using an unpaired Student’s t-test, and Bland–Altman analysis was done to compare the differences between OSPE and CPE. Student and faculty feedback was collected on a 5-point Likert scale for closed-ended questions, and a thematic analysis of open-ended questions was done.

Results

Students’ mean scores were significantly higher (p<0.001) with OSPE than with CPE. The feedback questionnaires showed high internal consistency, with Cronbach’s alpha of 0.83 for students and 0.89 for faculty.

Conclusion

OSPE can be used for formative assessments in undergraduate medical students in biochemistry as it is feasible and acceptable to both students and faculty and brings a level of objectivity and structure to the assessment process.

INTRODUCTION

Medical education is undergoing a broad re-evaluation, with a major focus on the assessment of medical students. Assessment is the most important determinant of what students learn, and it also serves as a tool for shaping the whole educational process. Assessment should focus on the student’s ability to integrate, apply and use knowledge, and all of these components should be reflected in the scoring pattern.

The conventional practical examination (CPE) involves writing detailed procedures for the given experiments and performing them unobserved, followed by a viva voce on the topic.1,2 This assessment method has several problems, especially in terms of outcome. It does not allow the examiner to assess the student’s skill, and scoring may be subject to the examiner’s bias. Although marking should depend only on the student’s competence, variability in examiners and in the experiments selected affects grading in CPE. Moreover, the scores reflect overall performance rather than individual competencies.3 Further, the subjectivity involved in CPE weakens the agreement between marks awarded by different examiners for the performance of the same candidate.4

In objective structured practical examination (OSPE), both the process and the product are tested, with emphasis on individual competencies. This reduces variability arising from students and examiners and thus improves the validity of the examination.5

We felt that a structured approach to assessing practical skills in biochemistry was needed, one that gives faculty strategies to enhance students’ skill performance and training efficiency while assessing the several objectives that a practical assessment in biochemistry must address. Newer assessment methods, including OSPE, have been sought to fulfil these targets.

We evaluated the feasibility and acceptability of OSPE as a formative assessment tool for practical skills in biochemistry and compared the performance of medical undergraduates in OSPE and CPE. We also explored student and faculty perceptions regarding the use of OSPE as a learning and assessment tool in biochemistry.

METHODS

This prospective study was done in the Department of Biochemistry at Vardhman Mahavir Medical College and Safdarjung Hospital, New Delhi, India. OSPE was used as a formative assessment tool for Phase 1 MBBS students in Biochemistry of the 2020–21 batch. It included 170 students and 6 faculty members. The study was conducted after approval from the Institutional Ethics Committee.

Feedback questionnaires for faculty and students were prepared, peer-reviewed and validated by experts. The student questionnaire consisted of statements rated on a 5-point Likert scale, framed in the cognitive, psychomotor and affective domains, and two open-ended questions to capture students’ perceptions of OSPE. A separate validated questionnaire with a similar pattern was designed for faculty feedback. Cronbach’s alpha was 0.83 for the student questionnaire and 0.89 for the faculty questionnaire, indicating good internal consistency.
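Cronbach’s alpha is computed as α = k/(k − 1) × (1 − Σσᵢ²/σ_T²), where k is the number of items, σᵢ² is the variance of item i and σ_T² is the variance of respondents’ total scores. The study’s values (0.83 and 0.89) were obtained in SPSS; the minimal Python sketch below only illustrates the formula, and the response matrix in it is hypothetical, not study data.

```python
import statistics


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of Likert scores (1-5)."""
    k = len(responses[0])  # number of items (questionnaire statements)
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Transpose so that each tuple holds all respondents' answers to one item.
    items = list(zip(*responses))
    # Sum of the sample variances of the individual items.
    item_var_sum = sum(statistics.variance(col) for col in items)
    # Sample variance of each respondent's total score across all items.
    total_var = statistics.variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses: 4 respondents x 3 items (not study data).
demo = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2]]
print(round(cronbach_alpha(demo), 3))  # 0.939
```

Because the same variance estimator appears in the numerator and denominator, using population rather than sample variances gives the same alpha.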

Identification of competencies

The new competency-based medical education (CBME) curriculum has defined the list of practical competencies which each student has to perform as a part of the curriculum for biochemistry.6 Five competencies were selected from the list by 3 rounds of the Delphi technique involving 6 faculty members from the department of biochemistry. In the first round, 8 competencies were shortlisted, followed by 6 in the second round, and 5 were finalised in the third round. These were used to compare the assessments by CPE and OSPE. The competencies selected for formative assessment were: (i) identify abnormal constituents in urine, interpret the findings, and correlate these with pathological states; (ii) demonstrate estimation of serum creatinine and creatinine clearance; (iii) demonstrate estimation of serum proteins, albumin and albumin:globulin ratio; and (iv) demonstrate estimation of glucose in serum.

Planning and implementation

Permission was taken from the Dean of the Institute and Head of the Department to conduct this study. A core committee of 6 faculty members from the Department of Biochemistry conducted an orientation programme for all the faculty and residents involved in designing or conducting OSPE.

A pilot OSPE was conducted with a group of 14 student volunteers (not included in the study) to check the feasibility and reliability of the study tool. All the allotted topics had been taught to students earlier in their practical classes.

Examinations were announced to students 15 days in advance. Adequate instructions about the pattern of examination by CPE and OSPE were given to the students.

Protocol for assessment through CPE

CPE in Biochemistry (40 marks) consisted of 2 components: practical exercises (25 marks) and a viva voce related to the theory topics covered during the term (15 marks). The practical exercises consisted of qualitative/quantitative experiments with procedures, calculations and interpretations.

Protocol for assessment through OSPE

OSPE stations were designed, peer-reviewed and validated by experts. Peer-agreed checklists for procedure stations, structured questions for response and spotter stations, and their answer keys were also prepared (the Supplementary file, available at www.nmji.in, includes information on blueprinting of the assessment, mapping of stations, and individual station details with their marks and time distribution). The checklists listed the stepwise skills for each task in chronological order. A score was given for each correctly performed step, and the overall score was then calculated. The faculty gave constructive feedback based on direct observation at the procedure stations.

The OSPE for each competency had 10 stations: procedure stations (2–4), response stations (3–5), spotter stations (1–2) and rest stations (2). Each station except the 2 rest stations carried 5 marks, giving a total of 40 marks. Each student was given 5 minutes to complete the task at each station. Examiners at the procedure stations were given clear instructions and the observation checklists. A timekeeper ensured that the allotted time was adhered to. The examiners observed each student at the procedure stations and allotted marks according to the checklist provided. Students marked their responses for spotter stations on the answer sheets provided to them. At the end of the day, the observers gave students feedback on their performance at the procedure stations.
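The station constraints described above (10 stations per competency, 2 unscored rest stations, 5 marks per scored station, 5 minutes per station) can be checked mechanically when drafting a blueprint. The sketch below is hypothetical: `check_blueprint` and the example station mix are illustrative and are not the department’s actual blueprint, which is given in the Supplementary file.

```python
# Constraints taken from the Methods text; the example blueprints are hypothetical.
STATION_RANGES = {
    "procedure": (2, 4),
    "response": (3, 5),
    "spotter": (1, 2),
    "rest": (2, 2),
}
TOTAL_STATIONS = 10
MARKS_PER_SCORED_STATION = 5  # rest stations carry no marks
MINUTES_PER_STATION = 5


def check_blueprint(counts: dict[str, int]) -> dict[str, int]:
    """Validate the station mix for one competency and return derived totals."""
    unknown = set(counts) - set(STATION_RANGES)
    if unknown:
        raise ValueError(f"unknown station types: {sorted(unknown)}")
    for kind, (low, high) in STATION_RANGES.items():
        n = counts.get(kind, 0)
        if not low <= n <= high:
            raise ValueError(f"{kind}: {n} stations, expected {low}-{high}")
    if sum(counts.values()) != TOTAL_STATIONS:
        raise ValueError(f"expected {TOTAL_STATIONS} stations, got {sum(counts.values())}")
    scored = TOTAL_STATIONS - counts["rest"]
    return {
        "scored_stations": scored,
        "max_marks": scored * MARKS_PER_SCORED_STATION,
        "circuit_minutes": TOTAL_STATIONS * MINUTES_PER_STATION,
    }


# Hypothetical mix: 3 procedure, 4 response, 1 spotter, 2 rest stations.
print(check_blueprint({"procedure": 3, "response": 4, "spotter": 1, "rest": 2}))
# {'scored_stations': 8, 'max_marks': 40, 'circuit_minutes': 50}
```

With 8 scored stations the OSPE maximum is 8 × 5 = 40 marks, the same as the CPE (25 marks for practical exercises plus 15 for the viva voce), so raw scores from the two methods are on the same scale.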

Data collection process

The 170 students were divided into two groups on the basis of their roll numbers. For competency-1, half the students were assessed through CPE-1 and the other half through OSPE-1. The students then crossed over for a second formative assessment for competency-2: the first half were assessed using OSPE-2 and the second half using CPE-2. The same process was repeated for competency-3 and competency-4. Thus, four practical assessments were done for four competencies through both OSPE and CPE, with two crossovers, over a period of 12 days (three days per competency). On each day, 28–29 students were assessed by OSPE and, simultaneously, 28–29 students by CPE.
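The allocation above can be expressed as a small schedule generator. This is a sketch under stated assumptions: the roll numbers are hypothetical integers, the two groups are taken as the lower and upper halves of the sorted roll list, and competencies 3 and 4 are assumed to repeat the CPE/OSPE pattern of competencies 1 and 2. The function names are illustrative only.

```python
def split_groups(roll_numbers: list[int]) -> tuple[list[int], list[int]]:
    """Split students into two halves by sorted roll number (group A, group B)."""
    ordered = sorted(roll_numbers)
    half = len(ordered) // 2
    return ordered[:half], ordered[half:]


def crossover_schedule(n_competencies: int = 4) -> dict[int, tuple[str, str]]:
    """Method used by (group A, group B) for each competency; groups swap every time."""
    return {
        c: ("CPE", "OSPE") if c % 2 == 1 else ("OSPE", "CPE")
        for c in range(1, n_competencies + 1)
    }


def daily_batches(group: list[int], days: int = 3) -> list[list[int]]:
    """Divide one group into `days` near-equal daily batches."""
    size, extra = divmod(len(group), days)
    batches, start = [], 0
    for d in range(days):
        end = start + size + (1 if d < extra else 0)  # spread the remainder over early days
        batches.append(group[start:end])
        start = end
    return batches


group_a, group_b = split_groups(list(range(1, 171)))  # hypothetical roll numbers 1-170
print(len(group_a), len(group_b))  # 85 85
print(crossover_schedule())
print([len(b) for b in daily_batches(group_a)])  # [29, 28, 28]
```

Splitting 85 students over three days gives batches of 29, 28 and 28, which matches the 28–29 students per day reported above.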

The mean scores obtained in the assessments were analysed statistically through unpaired t-test.

Feedback

Immediate feedback was given to students by observers for procedure stations.

After conducting the assessments, the feedback questionnaire was distributed to the students after a briefing, underscoring the importance of their honest critical feedback. Feedback was also collected from the faculty involved in conducting OSPE. The data were collected and entered periodically, whenever possible.

Data analysis

Data were entered in a Microsoft Excel spreadsheet, and the analysis was done using the Statistical Package for the Social Sciences (SPSS) software (version 21.0; IBM, Chicago, USA). Scores obtained in the OSPE and CPE formative assessments were presented as mean (SD) and compared using an independent (unpaired) t-test. A Bland–Altman plot was constructed to assess agreement between OSPE and CPE marks. A p value <0.05 was considered statistically significant. The median satisfaction score and satisfaction index were calculated to analyse closed-ended questions on the 5-point Likert scale.
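The unpaired (Student’s) t-test with pooled variance can be reproduced from the summary statistics in Table I. The minimal sketch below uses only the Python standard library and evaluates the t distribution by numerical integration instead of calling a statistics package; the helper names are hypothetical. For the glucose competency (OSPE 34.8 (3.05), CPE 30.2 (2.94), n=85 per group) it gives a mean difference of 4.6 with a 95% CI of about 3.693 to 5.507, in line with Table I.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """CDF via composite Simpson's rule on [0, |x|]; `steps` must be even."""
    a = abs(x)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: int) -> float:
    """Inverse CDF for 0.5 < p < 1, found by bisection."""
    lo, hi = 0.0, 50.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def unpaired_t_test(m1, s1, n1, m2, s2, n2, conf=0.95):
    """Student's unpaired t-test (pooled variance) from means, SDs and group sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    diff = m1 - m2
    t = diff / se
    p = max(0.0, 2 * (1 - t_cdf(abs(t), df)))  # two-sided p value
    margin = t_quantile(0.5 + conf / 2, df) * se
    return {"diff": diff, "t": t, "df": df, "p": p, "ci": (diff - margin, diff + margin)}


# Glucose row of Table I: OSPE 34.8 (3.05) vs CPE 30.2 (2.94), n = 85 each.
res = unpaired_t_test(34.8, 3.05, 85, 30.2, 2.94, 85)
print(round(res["t"], 2), res["df"], [round(v, 3) for v in res["ci"]])  # 10.01 168 [3.693, 5.507]
```

In practice a statistics package would be used; the numerical integration here only keeps the example dependency-free.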

Thematic analysis was done on open-ended questions from both faculty and students, where themes were identified and tabulated.

RESULTS

An analysis of mean scores obtained by students using the two methods suggested that students scored significantly higher marks in OSPE compared to CPE in all four assessments (Table I).

| Competency | OSPE (n=85), mean (SD) | CPE (n=85), mean (SD) | Total (n=170), mean (SD) | Mean difference (95% CI) |
|---|---|---|---|---|
| 1. Glucose | 34.8 (3.05) | 30.2 (2.94) | 32.51 (3.77) | 4.6 (3.693–5.507)* |
| 2. Urine | 35.1 (2.74) | 33.0 (2.06) | 34.03 (2.64) | 2.1 (1.372–2.84)* |
| 3. Proteins | 34.5 (3.51) | 30.5 (3.11) | 32.45 (3.87) | 4.0 (3.007–5.016)* |
| 4. Creatinine | 33.2 (3.05) | 30.0 (3.02) | 31.57 (3.42) | 3.2 (2.27–4.107)* |

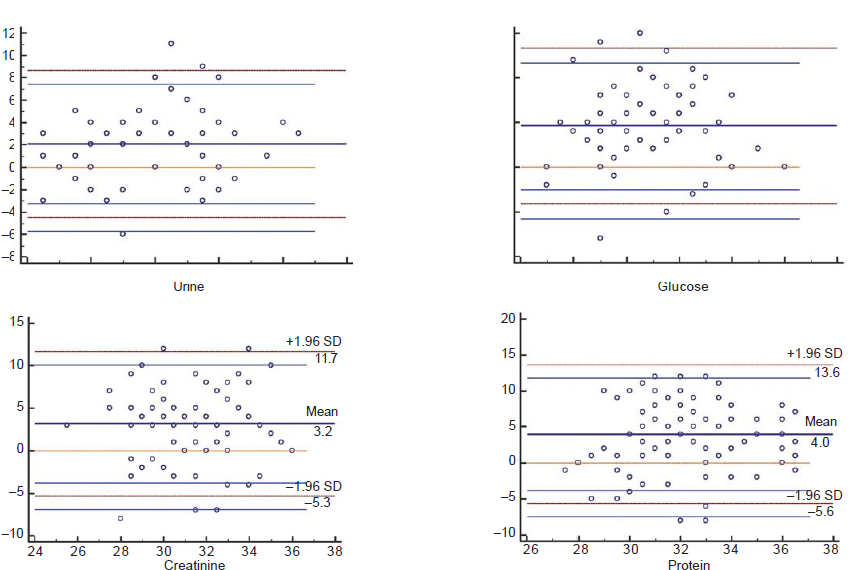

Student performance using the two methods was compared using the Bland–Altman plot (Fig. 1). The limits of agreement were defined as the mean difference ± 1.96 SD of the differences. The limits were –4.119 to 13.319 for competency-1 (glucose), –4.465 to 8.677 for competency-2 (urine), –5.304 to 11.681 for competency-3 (creatinine) and –5.607 to 13.63 for competency-4 (proteins).

Fig. 1. Bland–Altman plots comparing students’ performance on objective structured practical examination (OSPE) and conventional practical examination (CPE) for glucose, urine, creatinine and protein, respectively

The visual examination of the plots indicates global agreement between the two assessment methods.
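A Bland–Altman analysis plots, for each pair of measurements, the difference (y-axis) against the mean of the two methods (x-axis), and draws the bias (mean difference) with limits of agreement at bias ± 1.96 SD of the differences. The sketch below assumes paired scores (one OSPE and one CPE score per student); the function names and the four-student data are hypothetical. The second helper recovers the bias and SD from reported limits: for glucose, (-4.119 + 13.319)/2 = 4.6, the mean difference in Table I.

```python
import statistics


def bland_altman(method_a: list[float], method_b: list[float], z: float = 1.96) -> dict:
    """Bias, 95% limits of agreement and plot points for paired scores (A minus B)."""
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    means = [(a + b) / 2 for a, b in zip(method_a, method_b)]
    bias = statistics.mean(diffs)   # mean difference
    sd = statistics.stdev(diffs)    # sample SD of the differences
    return {
        "bias": bias,
        "lower": bias - z * sd,
        "upper": bias + z * sd,
        "points": list(zip(means, diffs)),  # x = mean of the pair, y = difference
    }


def from_limits(lower: float, upper: float, z: float = 1.96) -> tuple[float, float]:
    """Recover (bias, SD of differences) from reported limits of agreement."""
    return (lower + upper) / 2, (upper - lower) / (2 * z)


# Hypothetical paired scores for four students (not study data).
ba = bland_altman([34, 36, 33, 35], [30, 31, 31, 29])
print(ba["bias"], round(ba["lower"], 3), round(ba["upper"], 3))  # 4.25 0.903 7.597

# Reported glucose limits of agreement.
bias, sd = from_limits(-4.119, 13.319)
print(round(bias, 3), round(sd, 3))  # 4.6 4.448
```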

Using the Likert scale, feedback from students and faculty about various aspects of OSPE was analysed. The median satisfaction score reported by the undergraduates was 4.2 (range 3–5). The mean score of each item in the questionnaire ranged from 3.4 (item 8) to 4.6 (item 11). Item 8 stated that OSPE is less stressful than the conventional method of assessment, while item 11 stated that feedback given after OSPE was helpful in clearing all doubts of students. The median satisfaction score of faculty about OSPE using the Likert scale was 5 (range 3–5).

Over 90% of students felt that the questions asked in OSPE were relevant to assessing their skills, and 84.7% felt that they were relevant to assessing their knowledge. Although 66.4% agreed that adequate time was provided at the stations, 24.7% felt that more time could have been given at the procedure stations to complete the task. Only 66.5% of students agreed that OSPE is less stressful than CPE, whereas more than 70% agreed that it was easier to score marks in OSPE, that OSPE was more objective, and that they were satisfied with its conduct. About 81.2% of students also felt that this type of examination should be introduced in other disciplines as well.

All the faculty involved in conducting OSPE felt that it was objective and uniform and assessed students’ practical skills better than CPE. They felt that both OSPE and CPE should be used, that OSPE was helpful for learners at all levels and helped them score better, and that it should be used routinely for practical assessments, since the feedback given to students makes it a learning tool that helps them improve their practical skills. They also agreed that the design of OSPE suited the expectations of the new CBME curriculum.

Themes were also identified in the responses to open-ended questions on students’ and faculty’s perceptions of OSPE and its shortcomings compared with CPE (Table III).

| Item | Strongly disagree, n (%) | Disagree, n (%) | Can’t say, n (%) | Agree, n (%) | Strongly agree, n (%) | Median satisfaction score | Satisfaction index |
|---|---|---|---|---|---|---|---|
| Questions were relevant to assess | | | | | | | |
| My skills | 1 (0.6) | 3 (1.7) | 6 (3.5) | 71 (41.8) | 89 (52.4) | 4.4 | 89.8 |
| My knowledge | 4 (2.3) | 6 (3.5) | 16 (9.4) | 49 (28.8) | 95 (55.9) | 4.3 | 89.2 |
| Adequate time provided | 6 (3.5) | 36 (21.2) | 15 (8.8) | 64 (37.6) | 49 (28.8) | 3.7 | 74.7 |
| Satisfied with the conduct of OSPE | 4 (2.3) | 28 (16.4) | 7 (4.1) | 25 (14.7) | 106 (62.4) | 4.2 | 84.7 |
| Both OSPE and CPE should be used | 16 (9.4) | 9 (22.4) | 7 (3.5) | 103 (60.9) | 35 (8.8) | 3.8 | 76.2 |
| Satisfied with assessment through OSPE | 8 (4.7) | 23 (13.5) | 12 (7.0) | 59 (34.7) | 68 (40.0) | 3.9 | 79.7 |
| OSPE is easier to score marks | 3 (1.7) | 17 (10.0) | 21 (12.6) | 73 (42.9) | 56 (32.9) | 3.9 | 81.7 |
| OSPE is less stressful than CPE | 21 (18.2) | 29 (17.0) | 17 (10.0) | 61 (35.9) | 42 (30.6) | 3.4 | 69.7 |
| OSPE is more objective than CPE | 6 (3.5) | 18 (10.6) | 2 (1.2) | 53 (31.1) | 91 (53.3) | 4.2 | 84.4 |
| Increased confidence to perform practical tests after OSPE | 4 (2.3) | 14 (8.2) | 5 (2.9) | 54 (31.8) | 93 (54.7) | 4.3 | 86.4 |
| Feedback given after OSPE was helpful | 0 (0.0) | 4 (2.3) | 2 (1.2) | 49 (28.8) | 115 (67.6) | 4.6 | 92.7 |
| OSPE should be used in other subjects | 7 (4.1) | 19 (11.1) | 6 (3.5) | 34 (20.0) | 104 (61.2) | 4.2 | 85.5 |

OSPE: objective structured practical examination; CPE: conventional practical examination
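Each closed-ended item can be summarised from its five response counts. The minimal sketch below codes responses from 1 (strongly disagree) to 5 (strongly agree) and computes the mean item score and the percentage who agreed or strongly agreed; the function name is hypothetical, and it does not attempt the satisfaction index, whose formula is not given here. For the “My skills” counts (1, 3, 6, 71, 89) it gives 754/170 ≈ 4.44, consistent with the 4.4 reported for that item, and 94.1% agreement, consistent with “over 90%” in the Results.

```python
LABELS = ["Strongly disagree", "Disagree", "Can't say", "Agree", "Strongly agree"]


def likert_summary(counts: list[int]) -> dict:
    """Summarise one item from its five response counts (coded 1 to 5)."""
    if len(counts) != len(LABELS):
        raise ValueError("expected five counts")
    n = sum(counts)
    # Mean item score: each response weighted by its code (1 = strongly disagree).
    mean_score = sum(code * c for code, c in enumerate(counts, start=1)) / n
    percent = {label: 100 * c / n for label, c in zip(LABELS, counts)}
    return {
        "n": n,
        "mean_score": mean_score,
        "percent": percent,
        "percent_agree": percent["Agree"] + percent["Strongly agree"],
    }


# Counts for the item "Questions were relevant to assess my skills".
s = likert_summary([1, 3, 6, 71, 89])
print(s["n"], round(s["mean_score"], 2), round(s["percent_agree"], 1))  # 170 4.44 94.1
```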

| Core idea | Representative comments (students) | Representative comments (faculty) |
|---|---|---|
| Q. What are your perceptions regarding OSPE? | | |
| Fair assessment | ‘The assessment was done in an unbiased way’ ‘The assessment was fair and had no scope of partiality.’ ‘I was sure I would be given marks on the basis of my attempt’ ‘The marking scheme for each question allowed fair assessment’ | ‘Checklist used for assessment provided clear grounds for marking’ ‘I was clear where the marks had to be deducted.’ |
| Objective method | ‘Checklist provided clearly eliminated the scope of bias’ ‘It is an objective way of assessment’ ‘No subjective questions were asked as used to be done in previous exams’ ‘Each question had a precise answer and marks allotted likewise’ | ‘Step-by-step approach to procedure is more helpful to assess practical skills.’ ‘To the point answer to a question is convenient for marking.’ ‘Objective evaluation of practical skills is preferable over subjective answers.’ |
| Well organized | ‘I liked the way OSPE was conducted in an organised manner’ ‘Clear directions were provided at the start of the exam’ ‘Clearly numbered stations avoided the confusion.’ ‘Teachers were available all the time to clear doubts’ | ‘More planning is required to design OSPE stations’ ‘It is a more organised way of assessing practical competencies’ ‘Clear instructions provided at each station helped to conduct OSPE smoothly.’ |
| Increased confidence | ‘I feel more confident to do the practical skills after OSPE’ ‘Stepwise approach to the task has increased the confidence to perform task correctly’ ‘Now I am sure how to do this particular task in a correct way’ | ‘I could feel that students were more confident about practical skills after OSPE than CPE’ ‘OSPE boosts the confidence levels of students’ ‘Stepwise approach brings more clarity of the objective and helps to build confidence’ |
| Relevant questions | ‘The questions asked were from within the given topic’ ‘Conventional Practical Exam covered out of the course topics during the viva voce’ | ‘Questions were designed relevant to the given competency’ ‘Learning objectives of the given competency were considered in designing OSPE’ |
| Lack of adequate time | ‘I felt more time could have been allotted to complete the procedure stations.’ ‘I could not complete my task at the procedure stations due to inadequate time’ | ‘Students were given adequate time for each station’ ‘Spotter stations could have been given less time and more time for procedure stations’ |
| Q. What are the shortcomings of OSPE in comparison to CPE? | | |
| | ‘I am more comfortable with CPE than OSPE’ ‘Performing in CPE is more relaxing’ ‘Faculty had to be faced only at the end of procedure during viva but in OSPE, continuous monitoring occurs.’ ‘We have to be on our toes continuously in OSPE’ | ‘Lot of planning is required for designing OSPE’ ‘More manpower is required for smooth conduction of OSPE’ ‘More organisation is required for conduction of OSPE’ |

OSPE: objective structured practical examination; CPE: conventional practical examination

DISCUSSION

Assessment is a method of determining the adequacy and effect of educational activities in relation to their goals. Conventional methods of assessment have numerous shortcomings. Apart from the students’ performance, other factors, such as experimental factors, instrument conditions and examiner factors, influence scoring. Furthermore, individual skills are not assessed; only the final outcome is scored. The CPE in biochemistry does not evaluate students’ psychomotor performance and communication skills. Thus, students are assessed mostly in the cognitive domain and not in the psychomotor or affective domains.7 Moreover, the examination process is time-consuming, as students must first perform the practical and then face a viva voce. Students often complain about the subjectivity of the examination as well as variability in the questions asked in performance exercises, which leads to variation in scores.

To overcome these drawbacks, new models of assessment have been proposed. An early innovation in this regard is the objective structured clinical examination (OSCE), described in 1975 and in greater detail in 1979 by Harden and his group from Dundee,8,9 which was later extended to the practical examination (OSPE). This method, with some modifications, has stood the test of time and has largely overcome the problems of CPE mentioned earlier.

OSPE can be used in any subject, and its advantage is that both the process and the product are assessed. It helps to achieve the learning objectives developed for the competencies laid down in the CBME curriculum. Each station has a defined objective, which determines the domain assessed: psychomotor skills are assessed at procedure stations and cognitive skills at response stations. The examiner records performance on a checklist; standards for checking the competencies are decided in advance, and peer-agreed checklists are used for marking and evaluation.10 Students also take more interest and remain alert throughout the assessment. OSPE also integrates teaching and evaluation through the feedback given to students at the end.11 It has a better discrimination index, despite its drawbacks: more faculty members need to be trained in competency-based assessment, more materials and manpower are required to conduct it, and standardising OSPE stations and checklists for internal and external validity demands time and proper planning. Although organising OSPE requires teamwork and logistics, a large number of students can be tested under standard conditions in a short time.

OSPE is becoming more popular among medical colleges in India. Dutta et al.12 introduced electronic objectively structured practical examination (eOSPE) during the COVID-19 pandemic to facilitate formative assessment. Cherian evolved a more practical method of administering OSPE;13 with advances in computer and software technology, this has been named computer-assisted OSPE (COSPE).

Since OSPE has been endorsed for the practical evaluation of preclinical and paraclinical subjects, we tested its feasibility and acceptability as a formative assessment tool in biochemistry by comparing it with CPE and by obtaining students’ and faculty members’ perceptions of it.

In our study, 170 undergraduate medical students’ practical skills in the analysis of abnormal constituents of urine, serum creatinine, serum proteins and blood glucose estimation were assessed with OSPE and CPE. The mean score of the students was significantly higher (p<0.0001) in OSPE as compared to CPE in all four practical competencies. A similar study has been reported by Mokkapati et al.10

Better performance in OSPE could be because scoring is objective, being based on established standards of competence and a peer-agreed checklist. Examiner variability, which affects scoring in CPE, is also reduced. Studies by Rahman et al.14 and Menezes et al.15 also found OSPE to be a better technique than traditional methods for assessing the practical skills of MBBS students in Physiology and Forensic Medicine, respectively.

Our study further supports the findings of these earlier studies, as students responded favourably to OSPE. Students felt that OSPE assessed relevant practical skills and covered appropriate knowledge consistent with the learning objectives, and they showed a positive attitude towards it. Interaction between students and teachers also increased, and students felt confident in performing practical skills after addressing their areas of weakness. Student feedback indicated that OSPE improved their practical skills, their satisfaction with assessment and their confidence in performing skills. Students appreciated the feedback provided at the end of OSPE and felt it was an important factor in improving their learning.

Though most students were satisfied with the time given at individual stations, some students found it difficult to manage time at procedure stations and thus demanded more time at these stations, probably due to the lack of practice. This component of our study can be compared with the study done by Manjula et al.16

The lowest satisfaction index among students (69.7) was for the statement that OSPE is less stressful than CPE. Examinations are a well-known source of stress and anxiety, and OSPE can be stressful, especially as this was the students’ first exposure to this kind of examination at our institute. This finding can also be compared with the study by Manjula et al.16 Feedback from the faculty gave insight into their satisfaction with OSPE and their motivation to adopt it as an assessment tool. In addition, students’ scores at the procedure stations emphasised the need for a more comprehensive teaching–learning effort. There is also a need to formulate multiple OSPE stations with valid, structured and standardised checklists to assess the range of psychomotor and cognitive domains, along with continuous faculty development programmes to acquaint faculty with innovations in medical education and technology.

Limitations

Our study had a small sample size and was not randomized. We did not schedule feedback in the timetable, as it would increase the learning time; however, students were willing to stay back for the feedback as they appreciated its value.

The number of stations for each competency was restricted to 10, with only 2 observed stations for glucose, protein and creatinine and 3 for abnormal/normal urine; the remaining stations were unobserved owing to lack of time and limited faculty participation.

Limited space in the department required OSPE to be conducted over 6 days, with 30 students assessed each day. This required more manpower, resources and time within the limited time available for biochemistry.

Evaluator fatigue, and students’ concern that poor performance might create a negative impression on evaluators, were notable.

Implications of the study

We feel that OSPE conducted at the end of each practical competency for formative assessment will improve students’ psychomotor skills. Identifying students’ strengths and weaknesses through such assessment will help improve teaching–learning strategies. As a result, students may perform better in the final summative assessment.

Introducing regular formative assessment of knowledge and skills (in all competencies) will make students more competent in the knowledge and skills required during internship. This could result in better patient care and better satisfaction indices from caregivers.

Conclusion

Assessment is a pivotal element of a competency-based curriculum. OSPE is objective, structured, unbiased, and on the whole, less time consuming. It is an effective approach to assessing students’ practical skills and provides a forum for improving both teaching and learning through the immediate feedback given to students. Faculty involved in organizing and conducting OSPE felt that such exercises could be given frequently for formative assessments of students. All the participants were in favour of using OSPE in the future for practical skills sessions in biochemistry and preferred to include OSPE in other subjects as well. However, good assessment requires continuous efforts and innovation, sufficient resources such as manpower and instrumentation, time and proper planning. Thus, OSPE can supplement the existing pattern of CPE.

References

1. OSPE in anatomy, physiology and biochemistry practical examinations: Perception of MBBS students. Indian J Clin Anat Physiol. 2016;3:482-4.
2. Introduction and implementation of OSPE as a tool for assessment and teaching/learning in physiology. Natl J Basic Med Sci. 2019;9:138-42.
3. Study on objective structured practical examination OSPE in histo anatomy for I MBBS and comparison with traditional method. Indian J Appl Res. 2016;6:136-9.
4. A review on objective structured practical examination (OSPE). Dinajpur Med Col J. 2017;10:159-61.
5. Objective structured clinical/practical examination (OSCE/OSPE). J Postgrad Med. 1993;39:82-4.
6. Competency based undergraduate curriculum for the Indian medical graduate. Available at www.nmc.org.in/wp-content/uploads/2020/01/UG-Curriculum-Vol-I.pdf (accessed on 10 Apr 2022).
7. Objective structured practical examination as a tool for evaluating competency in gram staining. J Educ Res Med Teach. 2013;1:48-50.
8. Assessment of clinical competencies using an objective structured clinical examination (OSCE). Med Educ. 1979;13:41-54.
9. Assessment of clinical competencies using objective structured clinical examination. Br Med J. 1975;1:447-51.
10. Objective structured practical examination as a formative assessment tool for 2nd MBBS microbiology students. Int J Res Med Sci. 2016;4:4535-40.
11. Critical analysis of performance of MBBS students using OSPE and TDPE––a comparative study. Nat J Commun Med. 2011;2:322-4.
12. The transition from objectively structured practical examination (OSPE) to electronic OSPE in the era of COVID-19. Biochem Mol Biol Educ. 2020;48:488-9.
13. COSPE in anatomy: An innovative method of evaluation. Int J Adv Res. 2017;5:325-7.
14. Evaluation of objective structured practical examination and traditional practical examination. Mymensingh Med J. 2007;16:7-11.
15. Objective structured practical examination (OSPE) in forensic medicine: Student’s point of view. J Forensic Leg Med. 2011;18:347-9.
16. Student’s perception on objective structured practical examination in pathology. J Med Educ Res. 2013;1:12-14.