Translate this page into:

Sample size calculation with simple math for clinical researchers

Corresponding Author:

Kameshwar Prasad

Department of Neurology, Neurosciences Centre, All India Institute of Medical Sciences, Ansari Nagar, New Delhi 110029

India

drkameshwarprasad@gmail.com

| How to cite this article: Prasad K. Sample size calculation with simple math for clinical researchers. Natl Med J India 2020;33:372-374 |

Abstract

Clinicians often need a quick and rough idea of the sample size to assess the feasibility of their clinical research question, but developing countries often lack access to online calculators or its language. I describe a formula that clinicians, residents or any health researcher can remember and use to calculate sample size with mental arithmetic or with the use of a simple pocket calculator. This article covers controlled clinical trials. The formula for two equal-sized groups is simple: n = (16p [100–p])/d2 per group for dichotomous outcomes, where p is average of the two proportions with events, and d is the difference between the two proportions. For continuous scale outcomes, the formula is n=16s2/d2 per group where s is the standard deviation of the outcome data and d is the difference to be detected. The formula needs to be modified for unequal-sized groups. This simple formula may be helpful to clinicians, residents and clinical researchers to calculate sample size for their research questions. The feasibility of many research questions can be easily checked with the calculated sample size.Introduction

Clinicians often ask: how many patients should we include in our study to answer our clinical research question? The usual answer is: consult a statistician, use an online calculator or consult a table of sample size. Clinicians often lack the time and skills to do any of the above; they are too busy to find time to consult a statistician. Even if they do, they often face difficulty in understanding the language of a statistician. Online calculators use a language with terms such as alpha, beta and power. Similarly, even if they get a sample size table, they find it difficult to read it because tables contain terms unfamiliar to them, e.g. alpha and beta. In the absence of a sample size estimate, clinicians are unable to determine whether they would get enough number of patients to answer the clinical research question. A similar question bothers a resident deciding his topic for dissertation. This article aims to describe a formula that clinicians, residents or any health researcher can remember and use to calculate sample size with mental arithmetic or with the use of simple pocket calculator (e.g. in their mobile phones). The sample size calculation depends on the probability of type I error (alpha), power, the difference between the two groups and the variance; however, in most of the studies, probability of type I error is taken as 5% and power as 80% or 90%.[1] To simplify the formula for clinicians who plan an investigator-driven study, I present a formula with 5% alpha and 80% power, which can be committed to memory.

A controlled clinical trial is usually a randomized (sometimes non-randomized) controlled trial with two groups: one receiving the new treatment/intervention and the other receiving the standard treatment (control).

I describe the formula first in the context of planning a controlled clinical trial with two equal-sized groups. Let us consider a clinician planning to determine whether recombinant factor VIIa decreases mortality in patients with hypertensive intracerebral haemorrhage. What should be the sample size? To calculate the sample size, we need to know two things:

- What is the mortality with the current standard treatment (say 60%)=po

- What would (you like or guess) the mortality to be with the use of rFVIIa (say 40%)=pe

- From these two figures, you have to calculate two other figures:

- Average of the two figures (say, p)=(po+pe)/2

- The difference between the two figures (say, d)=po–pe (this should be clinically important and plausible)

Now, we are all set to do the sample size calculation. The formula is simple: sample size required per group: 16p (100–p)/d2. In the example above, p=(60+40)/2=50 and d=(60–40)=20. Thus, the sample size per group=16×50(100–50)/(20)2=(16×50×50)/ (20×20)=100 patients per group, i.e. total sample size 200 patients.

I have used mortality as outcome, only as an example. The formula will work for any event as an outcome. The event may be stroke, myocardial infarction (MI), remission, treatment failure and disability-free survival. The only condition is that the outcome should have only two categories: death/survival, relapse/no relapse, failure/success, stroke/no stroke and MI/ no MI. Such outcomes are called ‘dichotomous’. Hence, the formula is for dichotomous outcomes. To recapitulate the formula for sample size required per group in a comparative study with two equal-sized groups is:

16p(100–p)/d2

where, p=average of event rates (%) in the two groups, and d=difference in the event rates (%) between the two groups.

After calculation for one group, there is a need to multiply it by 2 for total sample size.

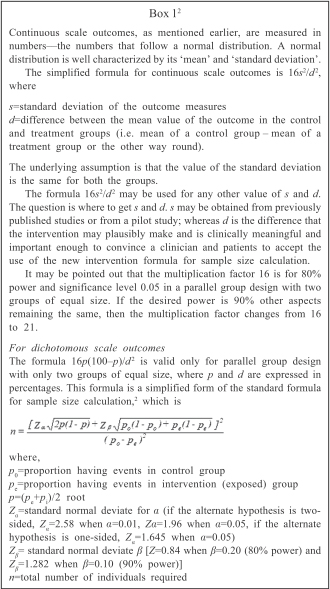

It may be noted that the figures to be used in this formula must be in the form of ‘percentages’ not in decimals. For clinicians, it may be easier to work in percentages than in decimals. For those who prefer to work in decimals, the formula modifies to 16p(1–p)/d2. This is the simplified form of the usual formula given in [Box 1].

Another common type of outcome is expressed in a wide range of numbers such as left ventricular ejection fraction, blood pressure and scale-based scores. Such outcomes are called numerical (may be discrete or continuous) but often referred to simply as continuous. For such an outcome, an easy way to determine sample size is to calculate the size using the formula 16(s2/d2) where s is the standard deviation of the outcome measurement and d is the difference to be detected between the group [Box 1].

Allowance for interim analysis

As a rough guide, the following approach is suggested:[1]

- Plan two interim analyses, not more

- To compensate for this, increase the study size by about 15%

- Use p<0.001 for the first and p<0.01 for the second interim analyses to derive conclusions

- Use p<0.04 for the final analysis to declare statistical significance.

Allowance for losses to follow-up

All attempts should be made to avoid any losses to follow-up because of two reasons:

- Those lost to follow-up are often different in prognostic factors and outcomes from those who come for follow-up. This may be a source of bias in the study.

- The losses reduce the size of sample available for analysis, and this decreases the power of the study.

However, it is rarely possible to avoid losses completely; the reduced power may be avoided by increasing the sample size to compensate for the expected percentage of losses. If this percentage is f%, then the adjust sample size is equal to (100/n)/(100–f %) (if, f is in decimals: n/[1–f]).

Comparison of more than two groups

Controlled clinical trials usually compare two groups. Some trials have three or more groups. For example, in a trial of recombinant factor VIIa, different groups had different doses. In designing a trial with three or more groups, one should decide which pairwise comparisons between groups are important. For each pairwise comparison, the sample size should be calculated using the methods described above, and the largest of the sample size thus obtained should be used for planning the study.

Discussion

The formula presented is simple and may be helpful to clinicians, residents and clinical researchers to calculate sample size for their research questions. With some practice, researchers can remember the formula 16p(100–p)/d2 for dichotomous and 16s2/d2 for continuous outcomes. Feasibility of many research questions can be easily checked with the calculated sample size.

The formula may also motivate researchers to focus their attention on the main parameters required for sample size calculation. This may stimulate thinking about the importance of the difference that is plausible and important with the intervention under consideration. With this formula in mind, clinician researchers will focus on the standard deviation or the percentage with outcomes in the control group while reading the literature.

The formula may not completely obviate the need to consult a statistician, but certainly encourage the clinician researchers to acquire the necessary information before the consultation. For many simple projects, the formula may meet the needs for sample size calculation.

The formula 16s2/d2 or 16p(100–p)/d2 covers only parallel design with two equal-sized groups, with two-tailed hypothesis with α=0.05 and power of 80%. I selected this design as this is the most common design used by clinician researchers. However, if the investigator decides to have 90% power, other aspects remaining the same, she/he needs to substitute 21 in place of 16. The rest of the design parameters remain unchanged. Similarly, for 95% power, 23 replaces 16.

I have purposefully kept the derivation of the formula in the [Box 1]. Clinicians need a simple way to arrive at sample size. Once able to remember and use the formula, they may be curious to know how the formula has been derived and particularly where does 16 come from? It is to satisfy this curiosity that I present the derivation in [Box 1]. If clinicians face a complex formula in the beginning, many of them shut off their mind and do not proceed further for fear of complexity. I think it is better to start with simple explanations, raise curiosity and then present complex things. This strategy may hold their attention till the end.

I do not claim to be the first to simplify the sample size formula. Lehr[3] was probably the first to do this. He presented the simplified formula for dichotomous outcomes as 16p(1–p)/d2. Thus, the average of the event rates must be entered into the formula in decimals, i.e. 20% as 0.2 or 5% as 0.05. Then, the subtraction is from 1, rather than 100. I think clinicians find it easier to deal with percentages. For example, they find it easier to do the subtraction (100–2) than (1–0.02). I have found this from clinicians’ groups in several workshops. Therefore, I have preferred to keep the formula in percentages.

I hope that a broad range of health researchers would find it easy to remember and use the formula presented to calculate the sample size, often with just mental mathematics. I have presented this in many workshops, and participants have found it easy and helpful.

Conflicts of interest. None declared

| 1. | Smith PG, Morrow RH. Study size: Other factors influencing study size. In: Methods for field trial of interventions against tropical diseases: A toolbox. 66th ed. Oxford:Oxford Univerisy Press; 1991. [Google Scholar] |

| 2. | Kelsey KK, Thompson WD, Evans AS. Methods of sampling and estimation of sample size. In: Methods in observational epidemiology. 274th ed. Oxford:Oxford Univerisity Press; 1986. [Google Scholar] |

| 3. | Lehr R. Sixteen S-squared over D-squared: A relation for crude sample size estimates. Stat Med 1992;11:1099–102. [Google Scholar] |

Fulltext Views

9,378

PDF downloads

2,237