
Establishing links between assessments and expected learning outcomes through curriculum mapping in a dental physiology curriculum

2 Department of Pharmacology and Centre for Cardiovascular Pharmacology, Melaka-Manipal Medical College, Manipal Academy of Higher Education, Manipal, Karnataka, India

3 Formerly Department of Physiology, Kasturba Medical College, Manipal Academy of Higher Education, Manipal, Karnataka, India

4 Department of Physiology, American University School of Medicine Aruba (AUSOMA), Wilhelminastraat 59, Oranjestad, Aruba

Corresponding Author:

Reem Rachel Abraham

Department of Physiology, American University School of Medicine Aruba (AUSOMA), Wilhelminastraat 59, Oranjestad, Aruba

reemabraham@gmail.com

How to cite this article: Vashe A, Devi V, Rao KR, Abraham RR. Establishing links between assessments and expected learning outcomes through curriculum mapping in a dental physiology curriculum. Natl Med J India 2021;34:40–45.

Abstract

Background. The literature has established the relevance of curriculum mapping for determining the links between expected learning outcomes and assessment. Nevertheless, few studies have confirmed the use of such maps.

Methods. Using curriculum mapping, we assessed the links between assessments and expected learning outcomes of the dental physiology curriculum for three batches of students (2012–14) at Melaka-Manipal Medical College (MMMC), Manipal. The questions asked under each assessment method were mapped to the respective expected learning outcomes, and students’ scores in the different physiology assessments were gathered. Students’ (n=220) and teachers’ (n=15) perspectives were collected through focus group discussions, individual interviews and questionnaire surveys.

Results. More than 75% of students were successful (≥50% scores) in most of the assessments. There was a moderate (r=0.4–0.6) to strong (r=0.7–0.9) positive correlation between most of the assessments. However, students’ scores in viva voce had a weak positive correlation with the practical examination score (r=0.230). Scores in the problem-based learning (PBL) assessments had either a weak (r=0.1–0.3) or no correlation with other assessment scores.

Conclusions. Through curriculum mapping, we were able to establish links between assessments and expected learning outcomes. We observed that, in the assessment system followed at MMMC, not all expected learning outcomes were given equal weight in the examinations. Moreover, there was no direct assessment of self-directed learning skills. The qualitative and quantitative data also showed that the assessments supported students in achieving the expected learning outcomes.

Introduction

Assessment drives student learning through its content,[1],[2] the information it provides and its programming (frequency, timing and regulation of student promotions).[3],[4] Students use assessment results as a measure of their own progress.[5] Assessment is also used by different stakeholders to gauge students’ achievement of competencies.[5],[6] The learning activities in which students engage are influenced mainly by the assessment method employed.[6],[7] Because students focus mainly on the content that appears in assessments, teachers can use this feature of assessment to facilitate learning.[8] Assessment thus becomes the cornerstone of any curriculum and the principal impetus for students to learn.[5],[6]

With many medical and dental schools adopting the outcome-based education (OBE) approach, assessing students’ progress towards the expected learning outcomes has gained importance.[9] In OBE, decisions about the curriculum are driven by the outcomes students are expected to attain by the end of a course.[10] Because expected learning outcomes are the focus of OBE, their assessment becomes an important part of the curriculum.[5] Assessments must therefore reflect the attainment of expected learning outcomes.[10],[11] This requires the development and use of appropriate tools to explore the links between expected learning outcomes and assessment methods.

A curriculum map is a tool for determining the links between different components of the curriculum (learning opportunities, learning resources, content and assessments), and it helps make the curriculum more transparent.[12] The literature reports the use of curriculum mapping to investigate the extent to which various topics are included in undergraduate medical curricula.[13],[14] Plaza et al. used curriculum mapping to determine the alignment of the intended (institutional and programme requirements), delivered (instructional delivery) and received (experienced) curriculum in a pharmacy programme.[15] Cottrell et al. at Virginia University School of Medicine mapped the curriculum to identify how each learning event contributed to the competencies achieved by medical students.[16] There is a paucity of research on the use of curriculum maps in dental curricula in the Indian context; to the best of our knowledge, this study is the first to report such an example. This study aimed to establish links between assessments and expected learning outcomes in physiology for an undergraduate dental curriculum using a curriculum map. We identified the following research questions to address this objective.

- Is there a link between various assessment methods in physiology and expected learning outcomes?

- Are the various assessment methods in physiology helping students achieve the expected learning outcomes?

Methods

Study setting

This study was conducted at Melaka-Manipal Medical College (MMMC), Manipal Campus, Manipal Academy of Higher Education, India. Since 2009, MMMC has offered the Bachelor of Dental Surgery (BDS) programme across twin campuses: students spend their first 2 years at the Manipal Campus, India, and the last 3 years at the Melaka Campus, Malaysia. Around 75 Malaysian students are admitted to the course every year.

Study design and study sample

We used a mixed-methods design. Students of BDS batches 4 (n=66), 5 (n=78) and 6 (n=76) (September 2012, September 2013 and October 2014 admissions) and physiology faculty members of MMMC (n=15) were included as study subjects. Ethical clearance was obtained from the Institutional Ethics Committee, Kasturba Medical College and Hospital, Manipal, before the work began (IEC 255/2012).

Educational context

The physiology curriculum for the BDS programme is divided into four blocks, each of 10 weeks’ duration:

- Block 1: Basic concepts, blood and nerve-muscle physiology

- Block 2: Cardiovascular, respiratory and gastrointestinal physiology

- Block 3: Endocrine, reproductive and renal physiology

- Block 4: Central nervous system and special senses.

The expected learning outcomes of the physiology curriculum, which students must attain by the end of the 1st year, are: knowledge of basic physiological principles and mechanisms, problem-solving skills, critical thinking skills, self-directed learning (SDL) skills, collaborative learning skills, communication skills and practical skills. These outcomes were derived from the BDS programme outcomes verified and approved by the Malaysian Qualifications Agency.

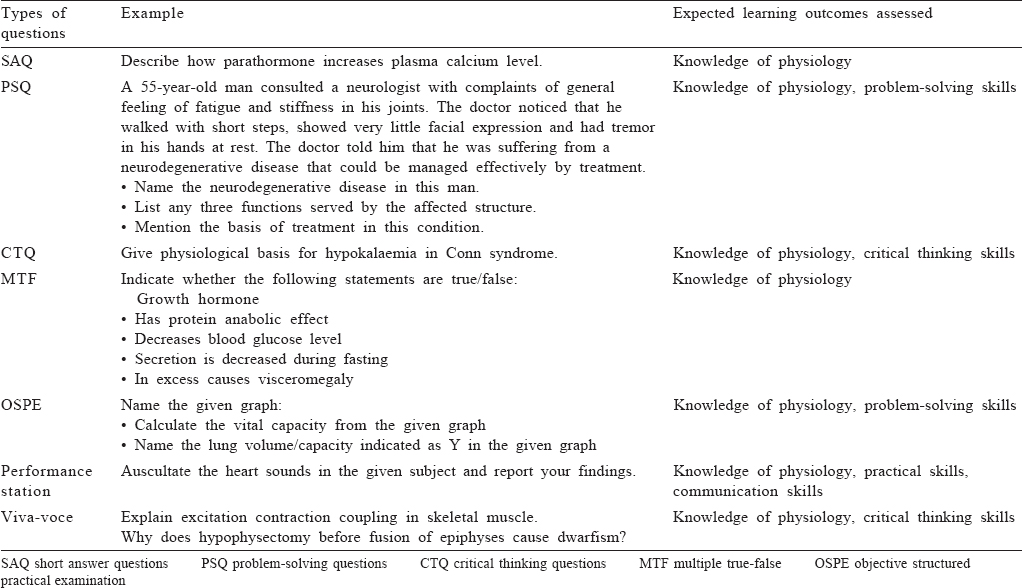

Assessment methods for the study sample included block-end theory, practical and viva voce examinations. The theory examination consisted of an essay paper (40 marks) and multiple true–false questions (60 marks). The essay paper included short answer questions; problem-solving questions (PSQs), in which a case scenario was followed by a few questions;[17] and critical thinking questions (CTQs), which tested the physiological basis of concepts.[18] The practical examination was conducted as a computer-assisted objective structured practical examination (COSPE) and an objective structured practical examination (OSPE).[19],[20] In the OSPE, 10 of the 11 stations included questions that tested students’ knowledge, problem-solving and critical thinking. At the remaining station (the performance station), students had to perform a given task on a simulated patient in front of the examiner and were assessed using a checklist with a global rating. In the COSPE, 10 PowerPoint slides with questions following the same pattern as the OSPE (without a performance station) were projected on a screen. Students took a viva voce examination only in the 2nd block, in which they were asked questions testing their knowledge and critical thinking.

Students took part in a total of four problem-based learning (PBL) sessions in year 1. PBL assessment focused on students’ active participation and presentation, and used a grading system of grades A to E, in which D corresponded to a score of 5 and E to the maximum score of 6.
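As a minimal sketch, the PBL success criterion used in this study (at least 75% of students obtaining a D or E grade) could be checked as below. The grade list is hypothetical illustration data, not study data, and the function names are our own; because the text gives scores only for D (5) and E (6), the sketch works on letter grades rather than on scores.

```python
from collections import Counter

# Grades that count towards the PBL criterion. The text states D = 5 and
# E = 6 (the maximum); scores for A to C are not given, so only letters are used.
TOP_GRADES = {"D", "E"}


def share_with_top_grades(grades: list[str]) -> float:
    """Percentage of students whose PBL grade is D or E."""
    if not grades:
        raise ValueError("no grades supplied")
    counts = Counter(grade.strip().upper() for grade in grades)
    top = sum(counts[grade] for grade in TOP_GRADES)
    return 100.0 * top / len(grades)


def meets_pbl_criterion(grades: list[str], threshold: float = 75.0) -> bool:
    """True if at least `threshold` percent of students obtain D or E."""
    return share_with_top_grades(grades) >= threshold


# Hypothetical grades for eight students (illustration only, not study data).
grades = ["E", "D", "E", "C", "D", "E", "D", "E"]
print(share_with_top_grades(grades))  # 87.5
print(meets_pbl_criterion(grades))    # True
```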

Data collection

To answer the first research question, the test questions under each assessment method were examined and mapped to the respective expected learning outcomes [Table 1]. In addition, the marks allotted to each type of test question were linked to the expected learning outcomes. Students’ scores in each assessment were gathered to confirm the links between assessments and expected learning outcomes. The criteria set were: (i) at least 75% of students passing the examinations (≥50% marks); (ii) at least 75% of students obtaining a D or E grade in the PBL assessment; and (iii) a moderate-to-strong correlation among the assessment methods linked to each expected learning outcome.
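The mapping step can be pictured as a lookup from assessment components to the outcomes they test. The sketch below uses a hypothetical, simplified map inferred from the assessment descriptions in the Methods and Discussion (for example, treating the OSPE performance station as assessing practical and communication skills, and PBL participation and presentation as assessing problem-solving, collaborative learning and communication); it is not a reproduction of Table 1, and the names `CURRICULUM_MAP` and `methods_per_outcome` are illustrative. Inverting the map shows how many components assess each outcome and flags any outcome that no component assesses.

```python
# Expected learning outcomes listed in the Methods.
OUTCOMES = [
    "knowledge", "problem-solving", "critical thinking",
    "self-directed learning", "collaborative learning",
    "communication", "practical skills",
]

# Hypothetical, simplified curriculum map: component -> outcomes it tests.
CURRICULUM_MAP: dict[str, set[str]] = {
    "theory: multiple true-false": {"knowledge"},
    "theory: short answer questions": {"knowledge"},
    "theory: PSQ": {"problem-solving"},
    "theory: CTQ": {"critical thinking"},
    "practical: OSPE": {"knowledge", "problem-solving", "critical thinking",
                        "communication", "practical skills"},
    "practical: COSPE": {"knowledge", "problem-solving", "critical thinking"},
    "viva voce": {"knowledge", "critical thinking", "communication"},
    "PBL: active participation": {"problem-solving", "collaborative learning"},
    "PBL: presentation": {"communication"},
}


def methods_per_outcome(cmap: dict[str, set[str]]) -> dict[str, list[str]]:
    """Invert the map: for each outcome, list the components that assess it."""
    coverage: dict[str, list[str]] = {outcome: [] for outcome in OUTCOMES}
    for component, outcomes in cmap.items():
        for outcome in outcomes:
            if outcome not in coverage:
                raise KeyError(f"unknown outcome in map: {outcome}")
            coverage[outcome].append(component)
    return coverage


coverage = methods_per_outcome(CURRICULUM_MAP)
for outcome, components in coverage.items():
    print(f"{outcome}: {len(components)} component(s)")

unassessed = [o for o, components in coverage.items() if not components]
print("Not directly assessed:", unassessed)  # ['self-directed learning']
```

Keeping outcomes as an explicit list makes gaps visible: an outcome with an empty component list is one the assessment system does not measure directly.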

To address the second research question, students’ and teachers’ perspectives were collected through separate validated questionnaires. Towards the end of the 1st year, students and teachers were asked to respond to the questionnaires on a 5-point Likert scale (strongly disagree=1 and strongly agree=5). In addition, two focus group discussions (FGDs) were conducted with each batch of students at the end of the year. Semi-structured individual interviews were also conducted with physiology teachers at MMMC at the end of the year. Member checking was done at the end of both the FGDs and the interviews to ensure the accuracy of the data.

Data analysis

Students’ test scores were analysed to determine the percentage of students who were successful (≥50% marks) in each examination. In addition, students’ scores on the multiple assessments linked to each specific outcome were correlated using Pearson correlation. The questionnaire data were summarized using frequencies and percentages. The qualitative data were analysed using constant comparative analysis,[21] in which the first author coded and categorized the data, after which all authors contributed to clustering the categories into common themes.
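The two quantitative analyses described above can be sketched in a few lines of Python. The scores are hypothetical (an essay paper out of 40 and a practical examination assumed to be marked out of 100), and the cut-offs in `strength()` are our assumption: they extend the bands reported in the abstract (weak 0.1–0.3, moderate 0.4–0.6, strong 0.7–0.9) so that values such as r=0.328 and r=0.355, which the Results describe as weak, fall in the weak band.

```python
import math


def pass_percentage(scores, max_marks, pass_fraction=0.5):
    """Percentage of students scoring at least pass_fraction of max_marks (>=50% by default)."""
    if not scores:
        raise ValueError("no scores supplied")
    passed = sum(1 for s in scores if s >= pass_fraction * max_marks)
    return 100.0 * passed / len(scores)


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when all scores are equal")
    return cov / math.sqrt(ss_x * ss_y)


def strength(r):
    """Assumed labels: |r| < 0.1 none, < 0.4 weak, < 0.7 moderate, otherwise strong."""
    size = abs(r)
    if size < 0.1:
        return "none"
    if size < 0.4:
        return "weak"
    if size < 0.7:
        return "moderate"
    return "strong"


# Hypothetical paired scores for six students (not study data).
essay = [28, 22, 35, 18, 30, 25]      # out of 40
practical = [60, 55, 72, 40, 66, 58]  # out of 100 (assumed)

print(round(pass_percentage(essay, 40), 1))  # 83.3
r = pearson_r(essay, practical)
print(round(r, 2), strength(r))  # 0.96 strong
```

On Python 3.10 or later, `statistics.correlation` from the standard library computes the same Pearson coefficient.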

Results

Is there a link between various assessment methods and expected learning outcomes?

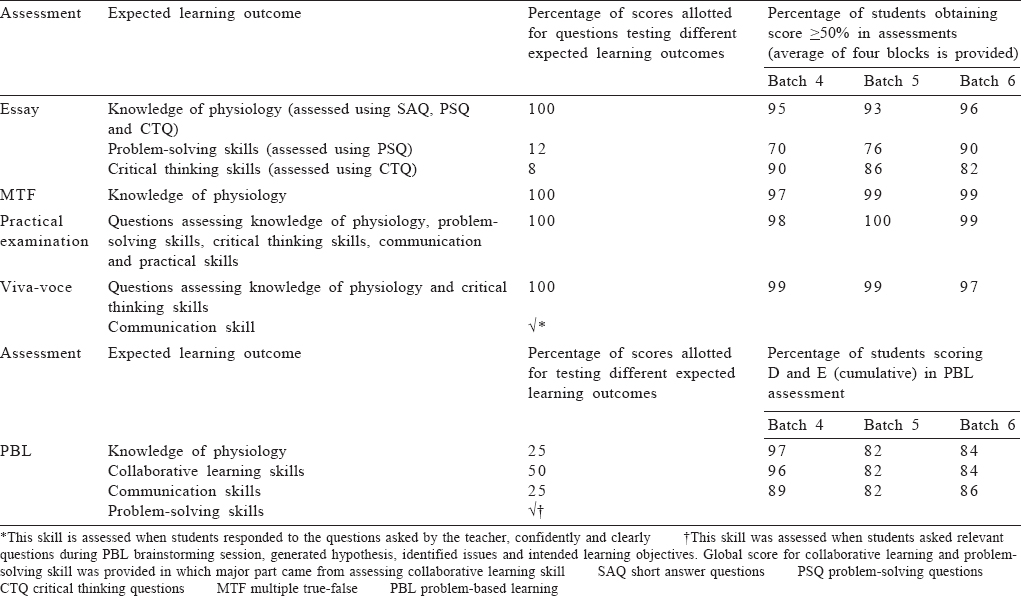

A given assessment method assessed multiple outcomes, and most expected learning outcomes were assessed by multiple assessment methods [Table 2]. In most examinations, >75% of students were successful (≥50% scores; Table 2). The scores in Table 2 are students’ average scores across the four blocks, except for viva voce, which was conducted only in block 2, and PBL, for which only block 3 scores were considered.

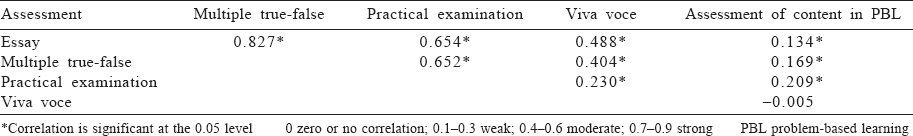

A moderate-to-strong positive correlation was found between scores in the different assessments that tested knowledge of physiology [Table 3]. However, students’ scores in viva voce had a weak positive correlation with the practical examination score in block 2. Scores in the PBL assessments had weak or no correlation with other assessment scores [Table 3].

A weak positive correlation was observed between scores in the PSQ component of the essay and the practical examination (r=0.328). However, scores for active participation during PBL showed no correlation with either the PSQ component of the essay or the practical examination (r=0.006 and r=0.038, respectively). A moderate positive correlation was found between the CTQ component of the essay and the practical examination (r=0.625). Viva voce correlated weakly but positively with the practical examination and the CTQ component of the essay (r=0.230 and r=0.355, respectively).

A weak positive correlation was observed between students’ scores in the PBL presentation session and the practical examination (r=0.150), as well as between the practical examination and viva voce (r=0.230). However, there was no correlation between the PBL presentation assessment and viva voce (r=0.040).

Are the various assessment methods helping students achieve the expected learning outcomes?

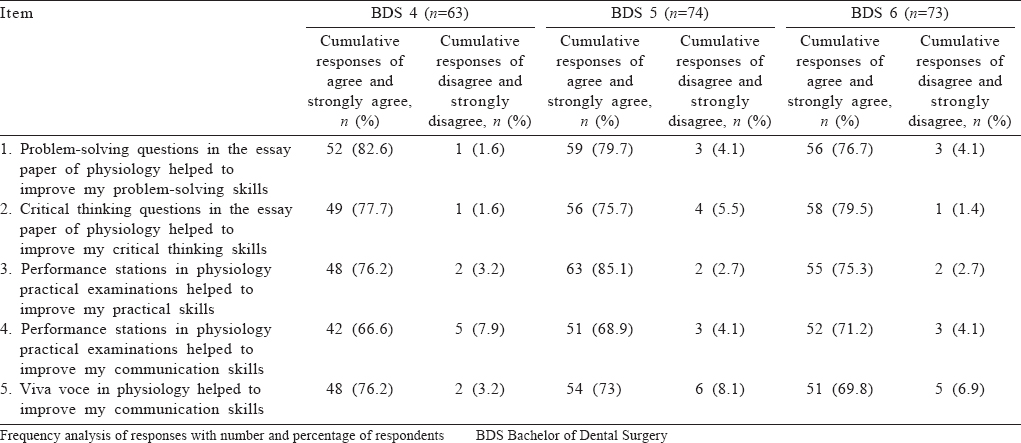

Students’ perception. More than 90% of students responded to the questionnaire. The internal consistency (Cronbach’s alpha) of the questionnaire was 0.820, 0.829 and 0.815 for batches 4, 5 and 6, respectively. Most students said that the assessments were helping them achieve the expected learning outcomes [Table 4].
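For readers less familiar with Cronbach’s alpha, it compares the sum of the individual item variances with the variance of respondents’ total scores: alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), for k items. The sketch below computes it for a small, hypothetical matrix of 5-point Likert responses (rows are respondents, columns are items); the data and the function name are illustrative only and do not reproduce the study questionnaire. As a common rule of thumb, values of about 0.7 or higher are often taken as acceptable.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondent rows, each holding k item scores."""
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least two items and equal-length rows")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical Likert responses: 5 respondents x 4 items (not study data).
likert = [
    [4, 5, 4, 4],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 3],
    [4, 4, 5, 5],
]
print(round(cronbach_alpha(likert), 3))  # 0.914
```

Because the same (n − 1) divisor appears in every variance, using population variances instead gives the same alpha.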

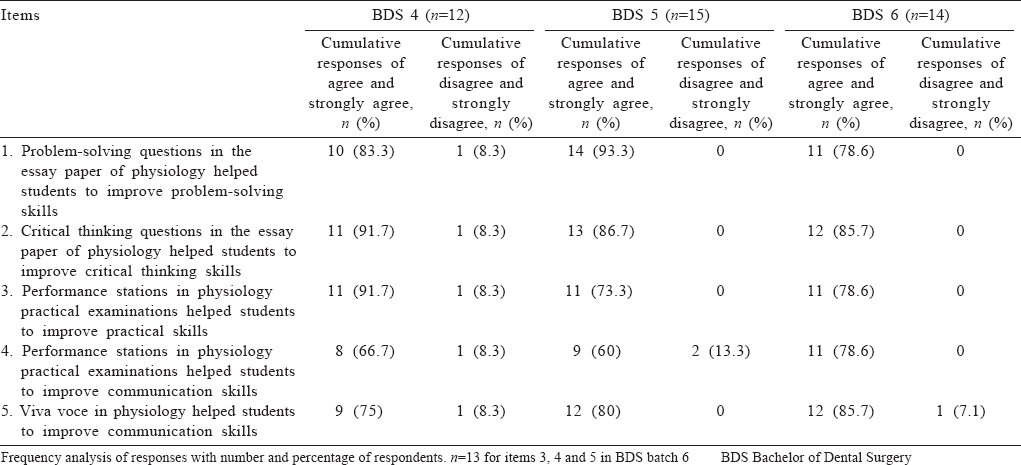

Teachers’ perception. More than 90% of the teachers responded to the questionnaire [Table 5]. Internal consistency was not calculated because the sample size was small. Most teachers viewed the assessments as a helpful tool for students to attain the expected learning outcomes.

Qualitative data analysis. The themes that emerged from the analysis of FGD sessions with students and individual interviews with faculty members were: (i) reasons for achieving the expected learning outcomes through assessments; and (ii) reasons for not achieving the expected learning outcomes through assessments/suggestions to improve the assessments. Students and teachers felt that the physiology assessments helped students acquire knowledge and skills.

Reasons for achieving the expected learning outcomes through assessments

Teachers and students unanimously agreed that the assessments were in line with the learning objectives.

‘Questions were within the syllabus.’ (Student)

‘As we are very specific about learning objectives and accountability, students can prepare well for the exam.’ (Teacher)

Both groups felt that the question pattern demanded a deeper understanding of the subject. Respondents commented that the challenge of solving clinical cases and CTQs helped students improve their problem-solving and critical thinking skills.

‘Without understanding the topics, students can’t answer the essay questions.’ (Teacher)

‘I like cases and physiological basis questions in essay. They make us think.’ (Student)

‘To perform well in the practical examination, we practiced the skills.’ (Student)

Participants said that interacting with the teacher during viva voce and at the performance station of the practical examination improved students’ communication skills.

‘As we had to interact with the teacher face to face in viva, we studied the content thoroughly and hence understood the topic well.’ (Student)

Reasons for not achieving the expected learning outcomes through assessments/suggestions to improve assessments

Teachers suggested that the quality of question papers could be improved by including more questions at the understanding level. Students felt that the time allotted for the performance station was inadequate and that there was little scope for interaction with the teachers and the simulated patient. Teachers felt that the number of performance stations could be increased from one to two, with more marks allotted, and that more viva voce examinations could be held. The following quotes support these findings:

‘I think there are small questions carrying two marks which are recall type of questions. We can ask describe instead of mention to assess their understanding of the topic.’ (Teacher)

‘Less time in performance station. We need time to think and then perform.’ (Student)

Discussion

Our study examined whether expected learning outcomes were addressed through assessments and whether assessments promoted the achievement of expected learning outcomes in an undergraduate dental physiology curriculum. Expected learning outcomes and their assessment are important because graduating students must be competent to treat patients.[5],[22] We found that the dental physiology curriculum used various types of assessment to assess the expected learning outcomes. It was encouraging to note that all expected learning outcomes except SDL skills were assessed. This finding suggests that although the curriculum offered ample scope for developing students’ SDL skills through PBL and other active learning strategies, the assessment of SDL skills requires further attention.

We observed that >75% of students were successful (≥50% scores) in most assessments, and this was consistent across all 3 years. Such consistency could be attributed to the alignment of the teaching–learning and assessment processes followed at MMMC. The criteria for a good assessment are validity, reliability, impact on learning and practicality.[5] Bridge et al. suggested that the validity of an assessment method could be determined by matching each question with the learning objectives.[23] In the dental physiology curriculum, the questions asked in the assessments were in line with the learning objectives, as confirmed by students and teachers, who said that the assessment questions were well aligned with the syllabus. Furthermore, the validity of the information provided by assessments depends on the care that goes into planning and preparing them.[24] At MMMC, to ensure that questions were aligned with the objectives defined in the course and to maintain the standard of question papers, papers set by faculty members were scrutinized first by all faculty members involved in the block and then by the head of the Department of Physiology.

Students performed at the expected level on PSQs and critical thinking questions, except for batch 4 [Table 2]. This provides tangible evidence of alignment between the assessments and the expected learning outcomes. Most assessment scores showed moderate-to-strong positive correlations with one another, whereas PBL assessment scores showed weak or no correlation with other assessment scores. The latter could be because PBL covered less content and most students performed well, as substantiated by the D and E grades that most students obtained [Table 2]; such a narrow spread of PBL scores would in turn limit the size of any correlation. The weak correlation between students’ scores in the PSQ component of the essay and the practical examination could be because the practical examination score reflected several expected learning outcomes, including knowledge of physiology, critical thinking skills and practical skills. The same applies to the CTQ component of the essay and viva voce, as viva voce scores reflected knowledge of physiology, critical thinking skills and communication skills.

The oral examination promotes student learning because it discourages students from guessing or predicting questions and thus encourages in-depth study of the topic. Moreover, students want to avoid being embarrassed in front of the teacher, which motivates them to acquire a good understanding of the topic.[25] In our study, students’ positive opinions endorsed this observation. However, some students found the viva voce stressful, which might explain why they were unable to improve their communication skills through it.

To summarize, using multiple assessments to test various learning outcomes allowed students to show the breadth and depth of their knowledge through various question patterns (recall and problem-solving). The average pass percentage in questions related to generic skills was also acceptable. Although most assessment scores showed moderate-to-strong positive correlations, PBL assessment scores showed weak or no correlation with other assessment scores. Students and teachers responded positively regarding the usefulness of the various assessment methods in achieving the expected learning outcomes.

Implications for further research

Based on the evidence from our study, we suggest that cross-institutional curriculum mapping studies could be undertaken to facilitate the collaborative exchange of ideas, which would further enhance the quality of the curriculum. As the mobility of health professional graduates worldwide is rising, using curriculum mapping to make curricula more transparent and to reach a consensus on learning outcomes across institutions seems to be a prudent approach.

Strengths and limitations of the study

A major strength of our study is its methodological rigour, which involved triangulation of data through multiple methods. Second, it is one of the few studies that provide an elaborate description of the process of curriculum mapping, using the dental physiology curriculum as an example. A major limitation is that the mapping process could not establish a comprehensive relationship between assessments and expected learning outcomes, because some assessments provided a single global score for multiple outcomes. For example, the practical examination assessed knowledge of physiology, problem-solving, critical thinking, communication and practical skills.

Conclusions

Through curriculum mapping, it was possible to establish links between assessments and expected learning outcomes, and we observed that, in the assessment system followed at MMMC, not all expected learning outcomes were given equal weight in the examinations. As physiology is taught in the 1st year of the dental curriculum, the knowledge component predominated. In addition, there was no direct assessment of SDL skills. The qualitative and quantitative data also showed that the assessments supported students in achieving the expected learning outcomes. The study also yielded relevant suggestions for improving various assessment practices.

Conflicts of interest. None declared

1. Scouller K. The influence of assessment method on students’ learning approaches: Multiple choice question examination versus assignment essay. High Educ 1998;35:453–72.

2. Lafleur A, Côté L, Leppink J. Influences of OSCE design on students’ diagnostic reasoning. Med Educ 2015;49:203–14.

3. Van der Vleuten CP. The assessment of professional competence: Developments, research and practical implications. Adv Health Sci Educ Theory Pract 1996;1:41–67.

4. Schuwirth LW, Van der Vleuten CP. Programmatic assessment: From assessment of learning to assessment for learning. Med Teach 2011;33:478–85.

5. Shumway JM, Harden RM; Association for Medical Education in Europe. AMEE guide no. 25: The assessment of learning outcomes for the competent and reflective physician. Med Teach 2003;25:569–84.

6. Pugh D, Regehr G. Taking the sting out of assessment: Is there a role for progress testing? Med Educ 2016;50:721–9.

7. Guilbert JJ. Educational handbook for health personnel. Geneva: World Health Organization; 1987.

8. Singh T. Basics of assessment. In: Singh TA (ed). Principles of assessments in medical education. New Delhi: Jaypee Brothers Medical Publishers; 2012:1–13.

9. Harden RM. Learning outcomes as a tool to assess progression. Med Teach 2007;29:678–82.

10. Harden RM, Crosby JR, Davis MH. Outcome based education Part 1: AMEE medical education guide No. 14. Med Teach 1999;21:7–14.

11. Tavakol M, Dennick R. The foundations of measurement and assessment in medical education. Med Teach 2017;39:1010–15.

12. Harden RM. AMEE guide no. 21: Curriculum mapping: A tool for transparent and authentic teaching and learning. Med Teach 2001;23:123–37.

13. Litaker D, Cebul RD, Masters S, Nosek T, Haynie R, Smith CK. Disease prevention and health promotion in medical education: Reflections from an academic health center. Acad Med 2004;79:690–7.

14. Shehata Y, Ross M, Sheikh A. Undergraduate allergy teaching in a UK medical school: Mapping and assessment of an undergraduate curriculum. Prim Care Respir J 2006;15:173–8.

15. Plaza CM, Draugalis JR, Slack MK, Skrepnek GH, Sauer KA. Curriculum mapping in program assessment and evaluation. Am J Pharm Educ 2007;71:20.

16. Cottrell S, Hedrick JS, Lama A, Chen B, West CA, Graham L, et al. Curriculum mapping: A comparative analysis of two medical school models. Med Sci Educ 2016;26:169–74.

17. Singh T. Assessment of knowledge: Written assessment. In: Singh TA (ed). Principles of assessments in medical education. New Delhi: Jaypee Brothers Medical Publishers; 2012:70–9.

18. Abraham R, Ramnarayan K, Kamath A. Validating the effectiveness of clinically oriented physiology teaching (COPT) in undergraduate physiology curriculum. BMC Med Educ 2008;8:40.

19. Torke S, Upadhya S, Abraham RR, Ramnarayan K. Computer-assisted objective-structured practical examination: An innovative method of evaluation. Adv Physiol Educ 2006;30:48–9.

20. Abraham RR, Raghavendra R, Surekha K, Asha K. A trial of the objective structured practical examination in physiology at Melaka Manipal Medical College, India. Adv Physiol Educ 2009;33:21–3.

21. Thorne S. Data analysis in qualitative research. Evid Based Nurs 2000;3:68–70.

22. Wass V, Van der Vleuten C, Shatzer J, Jones R. Assessment of clinical competence. Lancet 2001;357:945–9.

23. Bridge PD, Musial J, Frank R, Roe T, Sawilowsky S. Measurement practices: Methods for developing content-valid student examinations. Med Teach 2003;25:414–21.

24. Gronlund NE. Measurement and evaluation in teaching. New York: Macmillan; 1985.

25. Joughin G. A short guide to oral assessment. Leeds: Leeds Met Press; 2010.
